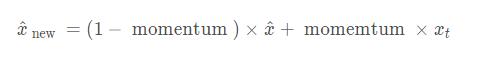

1. Note how momentum is defined

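PyTorch defines momentum smoothing in its BN layers the opposite way from the common momentum convention (used, for example, by TensorFlow's BN layers). In PyTorch, `momentum` is the weight given to the new batch statistic: running = (1 - momentum) * running + momentum * batch_stat. The default is momentum=0.1. In the common convention, momentum is the weight kept on the old running value, so PyTorch's momentum=0.1 corresponds to momentum=0.9 there.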
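A minimal pure-Python sketch of the two conventions for a single running statistic. The function names are hypothetical, chosen only for illustration; it shows that PyTorch's momentum m gives the same running value as momentum 1 - m in the classic convention.

```python
def pytorch_update(running, batch_stat, momentum):
    # PyTorch BN: momentum is the weight given to the NEW batch statistic.
    return (1 - momentum) * running + momentum * batch_stat


def classic_update(running, batch_stat, momentum):
    # Classic EMA (e.g. TensorFlow BN): momentum is the weight kept on the OLD value.
    return momentum * running + (1 - momentum) * batch_stat


running_pt = 0.0
running_tf = 0.0
for batch_mean in [1.0, 2.0, 3.0]:
    running_pt = pytorch_update(running_pt, batch_mean, momentum=0.1)
    running_tf = classic_update(running_tf, batch_mean, momentum=0.9)

# Both equal 0.561 up to floating-point rounding.
print(running_pt, running_tf)
```

When porting a model between frameworks, the momentum value therefore has to be converted as 1 - momentum rather than copied.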

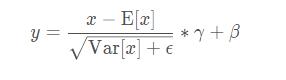

A BN layer computes:

$$y = \frac{x - \mathrm{E}[x]}{\sqrt{\mathrm{Var}[x] + \epsilon}} \cdot \gamma + \beta$$

where γ and β are learnable parameters. In PyTorch, the BN layer class has the following signature:

```python
torch.nn.BatchNorm2d(num_features, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
```

See the documentation for the meaning of each parameter. Note in particular that `affine` controls whether γ and β are learnable; when `affine=False`, they are not learned and act as the constants 1 and 0.
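A small sketch checking the formula against `nn.BatchNorm2d` in training mode, with γ and β set to arbitrary values (2.0 and 0.5 are illustrative choices). Per-channel statistics are taken over the N, H and W dimensions, and the normalization uses the biased variance. The second part shows that with `affine=False` the layer has no `weight`/`bias` parameters at all, only the statistics buffers.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(4, 3, 5, 5)  # (N, C, H, W)

bn = nn.BatchNorm2d(3)  # affine=True: learnable weight (gamma) and bias (beta)
with torch.no_grad():
    bn.weight.fill_(2.0)  # gamma
    bn.bias.fill_(0.5)    # beta

bn.train()
y = bn(x)

# Manual computation: per-channel mean and biased variance over N, H, W.
mean = x.mean(dim=(0, 2, 3), keepdim=True)
var = ((x - mean) ** 2).mean(dim=(0, 2, 3), keepdim=True)
y_manual = (x - mean) / torch.sqrt(var + bn.eps) * 2.0 + 0.5
print(torch.allclose(y, y_manual, atol=1e-5))  # True

# affine=False: no learnable gamma/beta, only the statistics buffers remain.
bn_plain = nn.BatchNorm2d(3, affine=False)
buffer_names = [name for name, _ in bn_plain.named_buffers()]
print(bn_plain.weight, bn_plain.bias)  # None None
print(buffer_names)
```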

2. Note that the BN layer holds statistics, namely the mean and variance

track_running_stats – a boolean value that when set to True, this module tracks the running mean and variance, and when set to False, this module does not track such statistics and always uses batch statistics in both training and eval modes. Default: True

During training (`model.train()`), the statistics BN normalizes with, the mean and variance, are estimated from the current batch.

At test time, after `model.eval()`, if `track_running_stats=True` the model uses the running statistics (`running_mean` and `running_var`), i.e. the values accumulated so far by the exponential moving average. Otherwise it still uses estimates computed from the current batch.

3. When the BN statistics are updated

The statistics are updated automatically inside `forward()` whenever the layer is in training mode (`model.train()`), not during backpropagation or in `optimizer.step()`.
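A quick check of this claim: a single forward pass in training mode, under `torch.no_grad()` and with no backward pass or optimizer at all, already updates the buffers. It also shows that the running variance is updated with the unbiased batch variance, and that the same forward pass in eval mode leaves the buffers untouched.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
bn = nn.BatchNorm1d(2, momentum=0.1)
x = torch.randn(16, 2) + 5.0

bn.train()
with torch.no_grad():  # no autograd, no backward(), no optimizer.step()
    bn(x)

# running_mean = (1 - 0.1) * 0 + 0.1 * batch_mean
expected_mean = 0.1 * x.mean(dim=0)
# running_var = (1 - 0.1) * 1 + 0.1 * unbiased batch variance (x.var default)
expected_var = 0.9 * torch.ones(2) + 0.1 * x.var(dim=0)
print(torch.allclose(bn.running_mean, expected_mean, atol=1e-6))  # True
print(torch.allclose(bn.running_var, expected_var, atol=1e-5))    # True
print(bn.num_batches_tracked)  # tensor(1)

# In eval mode the same forward call does not touch the buffers.
before = bn.running_mean.clone()
bn.eval()
with torch.no_grad():
    bn(x)
print(torch.equal(bn.running_mean, before))  # True
```

One consequence is that running validation data through a model left in training mode silently changes its statistics, even inside `torch.no_grad()`.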

4. Freezing BN and its statistics

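It follows from the above that the correct way to freeze BN is to pick out the BN layers during training and set them back to eval mode, overriding the training flag after `model.train()` has been called. Because `model.train()` resets every submodule to training mode, this has to be redone after each call to it.

Solution:

Use `apply()` instead of iterating over the children, because `named_children()` does not recurse into submodules.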

```python
def set_bn_eval(m):
    classname = m.__class__.__name__
    if classname.find('BatchNorm') != -1:
        m.eval()

model.apply(set_bn_eval)
```
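A self-contained check of the `apply()` approach on a small hypothetical model whose BN layer is nested one level deep (so iterating over `named_children()` of the outer model would not reach it). After one optimizer step the running statistics are unchanged, while γ and β still receive gradients; a later `model.train()` undoes the freeze.

```python
import torch
import torch.nn as nn


def set_bn_eval(m):
    classname = m.__class__.__name__
    if classname.find('BatchNorm') != -1:
        m.eval()


torch.manual_seed(0)
# Hypothetical toy model; the BN layer sits inside a nested Sequential.
model = nn.Sequential(
    nn.Conv2d(3, 4, 3),
    nn.Sequential(nn.BatchNorm2d(4), nn.ReLU()),
    nn.Flatten(),
    nn.Linear(4 * 6 * 6, 2),
)

model.train()             # first: train() resets every submodule to training mode
model.apply(set_bn_eval)  # then: re-freeze BN recursively

bn = model[1][0]
before_mean = bn.running_mean.clone()

opt = torch.optim.SGD(model.parameters(), lr=0.1)
loss = model(torch.randn(4, 3, 8, 8)).sum()
opt.zero_grad()
loss.backward()
opt.step()

print(model.training, bn.training)               # True False
print(torch.equal(bn.running_mean, before_mean)) # True: statistics frozen
print(bn.weight.grad is not None)                # True: gamma/beta still trained

model.train()       # a later train() call un-freezes BN ...
print(bn.training)  # True ... so apply(set_bn_eval) must be repeated
```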

Alternatively, override the module's `train()` method:

```python
def train(self, mode=True):
    """
    Override the default train() to freeze the BN parameters
    """
    super(MyNet, self).train(mode)
    if self.freeze_bn:
        print("Freezing Mean/Var of BatchNorm2D.")
        if self.freeze_bn_affine:
            print("Freezing Weight/Bias of BatchNorm2D.")
    if self.freeze_bn:
        for m in self.backbone.modules():
            if isinstance(m, nn.BatchNorm2d):
                m.eval()
                if self.freeze_bn_affine:
                    m.weight.requires_grad = False
                    m.bias.requires_grad = False
    return self  # nn.Module.train() returns self; keep that contract
```
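A runnable sketch of the override inside a complete module. `MyNet`'s backbone and head here are hypothetical placeholders, and the `freeze_bn` / `freeze_bn_affine` constructor flags are an assumed way of setting the attributes the method reads. Because `eval()` itself calls `train(False)`, the override also runs when switching to evaluation.

```python
import torch
import torch.nn as nn


class MyNet(nn.Module):
    def __init__(self, freeze_bn=True, freeze_bn_affine=True):
        super().__init__()
        self.freeze_bn = freeze_bn
        self.freeze_bn_affine = freeze_bn_affine
        # Hypothetical backbone and head, for illustration only.
        self.backbone = nn.Sequential(nn.Conv2d(3, 8, 3), nn.BatchNorm2d(8), nn.ReLU())
        self.head = nn.Sequential(nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(8, 2))

    def train(self, mode=True):
        super().train(mode)
        if self.freeze_bn:
            for m in self.backbone.modules():
                if isinstance(m, nn.BatchNorm2d):
                    m.eval()  # stop updating running_mean / running_var
                    if self.freeze_bn_affine:
                        m.weight.requires_grad = False  # freeze gamma
                        m.bias.requires_grad = False    # freeze beta
        return self

    def forward(self, x):
        return self.head(self.backbone(x))


net = MyNet()
net.train()
bn = net.backbone[1]
print(net.training, bn.training, bn.weight.requires_grad)  # True False False
out = net(torch.randn(2, 3, 8, 8))
print(out.shape)  # torch.Size([2, 2])
```

If the optimizer is built after `train()` has run, passing only the parameters with `requires_grad=True` keeps the frozen γ and β out of it entirely.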

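5. Fixing/freezing BatchNorm during training may lead to `RuntimeError: expected scalar type Half but found Float`

For example, the following code, which uses NVIDIA Apex's `network_to_half`, runs into this error: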

```python
import torch
from torchvision import models
from apex.fp16_utils import network_to_half  # NVIDIA Apex (legacy fp16 utilities)


def fix_bn(m):
    classname = m.__class__.__name__
    if classname.find('BatchNorm') != -1:
        m.eval()


model = models.resnet50(pretrained=True)
model.cuda()
model = network_to_half(model)  # converts to fp16 but leaves BatchNorm modules in fp32
model.train()
model.apply(fix_bn)  # fix batchnorm

input = torch.randn(8, 3, 224, 224).cuda().half()
output = model(input)
output_mean = torch.mean(output)
output_mean.backward()
```

The fix is to convert the frozen BN layers to half precision as well:

```python
def fix_bn(m):
    classname = m.__class__.__name__
    if classname.find('BatchNorm') != -1:
        m.eval().half()
```

The reason is that for regular training it is better (performance-wise) to use cuDNN batch norm, which requires its weights to be in fp32, so `network_to_half` does not convert batch norm modules to half. However, cuDNN does not support batch norm backward in eval mode, which is what you are doing, and the PyTorch implementation used instead requires the weights to have the same type as the inputs.

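Supplement: a summary of what to watch out for when using dropout and Batch Normalization in PyTorch and in TensorFlow

Things to watch out for with dropout and BN in PyTorch

Dropout in PyTorch:

Dropout is applied during training and disabled at test time.

In PyTorch, `net.eval()` switches the whole network into evaluation mode: quantities that are updated during the forward pass (such as BN's running statistics) are no longer updated, dropout is turned off, and BN uses its stored statistics. In principle, `net.eval()` should be used for every evaluation on the validation set.

`net.train()` switches back to training mode (dropout active, BN using batch statistics and updating its running statistics). Neither mode freezes the weights or turns off gradient computation; that is controlled by `torch.no_grad()` and `requires_grad`.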

```python
net_dropped = torch.nn.Sequential(
    torch.nn.Linear(1, N_HIDDEN),
    torch.nn.Dropout(0.5),  # drop 50% of the neurons
    torch.nn.ReLU(),
    torch.nn.Linear(N_HIDDEN, N_HIDDEN),
    torch.nn.Dropout(0.5),  # drop 50% of the neurons
    torch.nn.ReLU(),
    torch.nn.Linear(N_HIDDEN, 1),
)

for t in range(500):
    pred_drop = net_dropped(x)
    loss_drop = loss_func(pred_drop, y)
    optimizer_drop.zero_grad()
    loss_drop.backward()
    optimizer_drop.step()

    if t % 10 == 0:
        # change to eval mode in order to fix the dropout effect
        net_dropped.eval()  # dropout behaves differently than in train mode
        with torch.no_grad():  # no gradients are needed for evaluation
            test_pred_drop = net_dropped(test_x)
        # change back to train mode
        net_dropped.train()
```
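The effect of switching modes in the loop above can be checked on a single `nn.Dropout` layer. In training mode, surviving elements are scaled by 1 / (1 - p), so with p = 0.5 an all-ones input becomes a mix of 0s and 2s; in eval mode the layer is the identity. The last line illustrates that `eval()` does not switch off autograd.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
drop = nn.Dropout(0.5)
x = torch.ones(1000, requires_grad=True)

drop.train()
y_train = drop(x)
train_values = set(y_train.unique().tolist())
print(train_values)  # {0.0, 2.0}: dropped elements are 0, kept ones scaled by 2

drop.eval()
y_eval = drop(x)
print(torch.equal(y_eval, x))  # True: dropout is the identity in eval mode
print(y_eval.requires_grad)    # True: eval() does not turn off autograd
```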

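Batch Normalization in PyTorch:

`net.eval()` fixes BN's moving_mean and moving_var (called `running_mean` and `running_var` in PyTorch) so they are not updated. In the code below, each network is switched to eval mode for testing and back to train mode afterwards: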

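```python
# inside the network's __init__, for hidden layer i
if self.do_bn:
    bn = nn.BatchNorm1d(10, momentum=0.5)
    # IMPORTANT: self.bns is a plain list, so setattr is what registers the layer
    # on the Module (its parameters, buffers and train/eval mode)
    setattr(self, 'bn%i' % i, bn)
    self.bns.append(bn)
```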

```python
for epoch in range(EPOCH):
    print('Epoch: ', epoch)
    layer_inputs, pre_acts = [], []
    for net, l in zip(nets, losses):
        net.eval()  # set eval mode to fix moving_mean and moving_var
        pred, layer_input, pre_act = net(test_x)
        layer_inputs.append(layer_input)
        pre_acts.append(pre_act)
        net.train()  # free moving_mean and moving_var
    plot_histogram(*layer_inputs, *pre_acts)
```
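Why the `eval()` call before testing matters can be seen on a single `nn.BatchNorm1d` with the same momentum=0.5. In eval mode a test sample is normalized with the stored statistics only, so its output does not depend on which other samples share the batch. In train mode the output depends on the rest of the batch, and the running statistics are overwritten by test data. The batch sizes and scales below are arbitrary illustrative choices.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
bn = nn.BatchNorm1d(3, momentum=0.5)

# "Train" briefly so the running statistics hold something meaningful.
bn.train()
with torch.no_grad():
    for _ in range(20):
        bn(torch.randn(32, 3) * 2 + 1)

sample = torch.randn(1, 3)
others = torch.randn(7, 3) * 10  # very different data in the same test batch

bn.eval()
with torch.no_grad():
    alone = bn(sample)
    in_batch = bn(torch.cat([sample, others]))[:1]
print(torch.allclose(alone, in_batch))  # True: batch composition is irrelevant

stats_before = bn.running_mean.clone()
bn.train()  # forgetting eval() at test time
with torch.no_grad():
    in_batch_train = bn(torch.cat([sample, others]))[:1]
print(torch.allclose(alone, in_batch_train))          # False: depends on the batch
print(torch.equal(bn.running_mean, stats_before))     # False: stats polluted
```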


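Things to watch out for with dropout and BN in TensorFlow

Both dropout and BN take a `training` argument that says whether the model is training or testing. At test time, dropout drops nothing and BN uses its stored moving statistics instead of batch statistics.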

```python
tf_is_training = tf.placeholder(tf.bool, None)  # to control dropout when training and testing

# dropout net
d1 = tf.layers.dense(tf_x, N_HIDDEN, tf.nn.relu)
d1 = tf.layers.dropout(d1, rate=0.5, training=tf_is_training)  # drop out 50% of inputs
d2 = tf.layers.dense(d1, N_HIDDEN, tf.nn.relu)
d2 = tf.layers.dropout(d2, rate=0.5, training=tf_is_training)  # drop out 50% of inputs
d_out = tf.layers.dense(d2, 1)
```

```python
for t in range(500):
    sess.run([o_train, d_train], {tf_x: x, tf_y: y, tf_is_training: True})  # train, set is_training=True

    if t % 10 == 0:
        # plotting
        plt.cla()
        o_loss_, d_loss_, o_out_, d_out_ = sess.run(
            [o_loss, d_loss, o_out, d_out],
            {tf_x: test_x, tf_y: test_y, tf_is_training: False},  # test, set is_training=False
        )
```

Batch Normalization in TensorFlow:

```python
def add_layer(self, x, out_size, ac=None):
    x = tf.layers.dense(x, out_size, kernel_initializer=self.w_init, bias_initializer=B_INIT)
    self.pre_activation.append(x)
    # the momentum plays an important role; the default 0.99 is too high in this case!
    if self.is_bn:  # when using BN
        x = tf.layers.batch_normalization(x, momentum=0.4, training=tf_is_train)
    out = x if ac is None else ac(x)
    return out
```

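Setting BN's `training` argument to True only means that BN normalizes with batch statistics; it does not make BN update moving_mean and moving_var on its own. Those updates are separate ops created during the forward pass and collected in `tf.GraphKeys.UPDATE_OPS`, so you must make sure they run before each training step, for example by making the train op depend on them: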

```python
# !! IMPORTANT !! the moving_mean and moving_variance need to be updated,
# pass the update_ops with control_dependencies to the train_op
update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
with tf.control_dependencies(update_ops):
    self.train = tf.train.AdamOptimizer(LR).minimize(self.loss)
```
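The `UPDATE_OPS` bookkeeping above belongs to TF1-style graph code. As a contrast, and assuming TensorFlow 2.x with eager execution, the Keras `BatchNormalization` layer applies its moving-average update as part of the call itself when `training=True`. The sketch also shows the Keras momentum convention (weight kept on the old value), the opposite of PyTorch's from section 1; the input data is arbitrary.

```python
import numpy as np
import tensorflow as tf

# TF 2.x eager mode, Keras layer (not the TF1 graph API used above).
bn = tf.keras.layers.BatchNormalization(momentum=0.4)
x = np.random.RandomState(0).normal(loc=3.0, size=(16, 2)).astype("float32")

bn(x, training=True)  # moving statistics are updated immediately, no UPDATE_OPS

# Keras convention: moving = momentum * moving + (1 - momentum) * batch_mean,
# starting from moving_mean = 0.
expected = 0.4 * 0.0 + 0.6 * x.mean(axis=0)
moving_after_train = np.array(bn.moving_mean)
print(np.allclose(moving_after_train, expected, atol=1e-5))  # True

bn(x, training=False)  # inference: moving statistics are left unchanged
print(np.array_equal(np.array(bn.moving_mean), moving_after_train))  # True
```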

The above is based on my personal experience; I hope it serves as a useful reference, and please continue to support 腳本之家.
