項目環境:python3.6

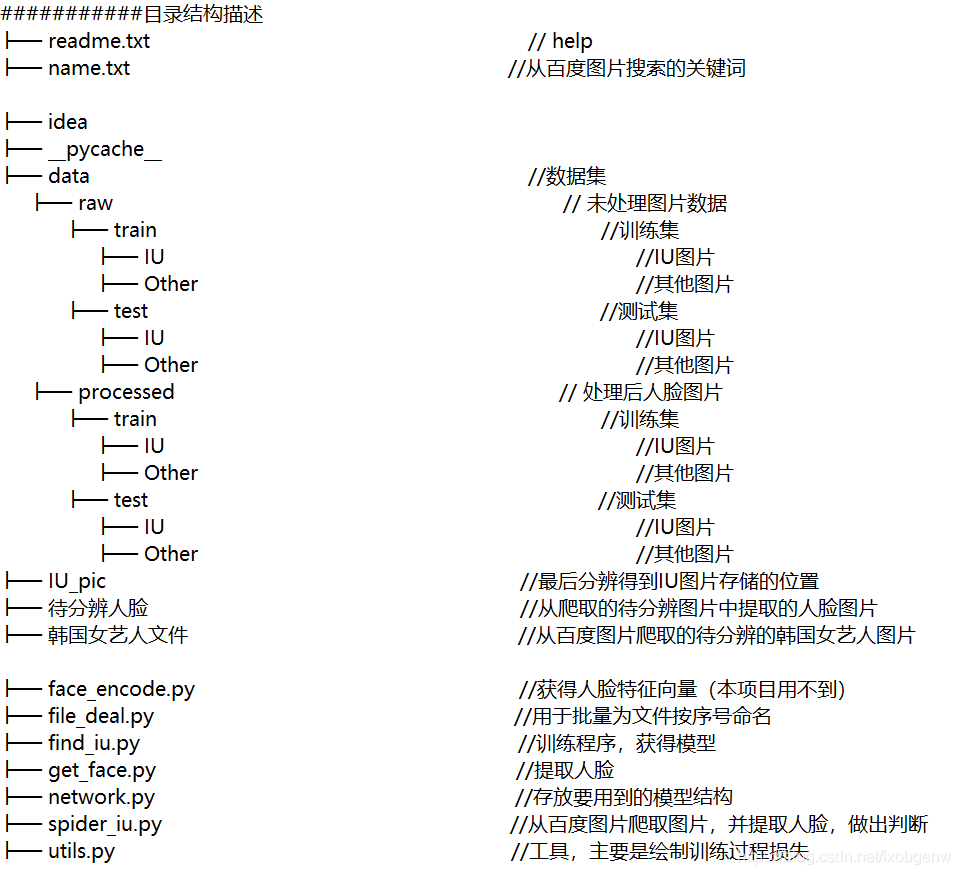

一、項目結構

二、數據集準備

數據集準備分為兩步:

- 獲取圖片.

- 提取人臉.

1、獲取圖片

首先可以利用爬蟲,從百度圖片上批量下載圖片,但注意下載數據集所用的關鍵詞不要和之后識別任務的關鍵詞太接近,否則若有圖片重合,就會產生“識別得很準”的錯覺。下面的程序為爬蟲部分,在name.txt文件中寫好要搜索的關鍵詞,即可使用。

# 爬蟲部分,存放到 name + ‘文件'

#############################################################################################

if GET_PIC == 1:

headers = {

'Accept-Language': 'zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2',

'Connection': 'keep-alive',

'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64; rv:60.0) Gecko/20100101 Firefox/60.0',

'Upgrade-Insecure-Requests': '1'

}

A = requests.Session()

A.headers = headers

tm = int(input('請輸入每類圖片的下載數量 '))

numPicture = tm

line_list = []

with open('./name.txt', encoding='utf-8') as file:

line_list = [k.strip() for k in file.readlines()] # 用 strip()移除末尾的空格

for word in line_list:

url = 'https://image.baidu.com/search/flip?tn=baiduimageie=utf-8word=' + word + 'pn='

tot = Find(url, A)

Recommend = recommend(url) # 記錄相關推薦

print('經過檢測%s類圖片共有%d張' % (word, tot))

file = word + '文件'

y = os.path.exists(file)

if y == 1:

print('該文件已存在,無需創建')

else:

os.mkdir(file)

t = 0

tmp = url

while t numPicture:

try:

url = tmp + str(t)

# result = requests.get(url, timeout=10)

# 這里搞了下

result = A.get(url, timeout=10, allow_redirects=False)

print(url)

except error.HTTPError as e:

print('網絡錯誤,請調整網絡后重試')

t = t + 60

else:

dowmloadPicture(result.text, word)

t = t + 60

numPicture = numPicture + tm

print('當前搜索結束,開始提取人臉')

#############################################################################################

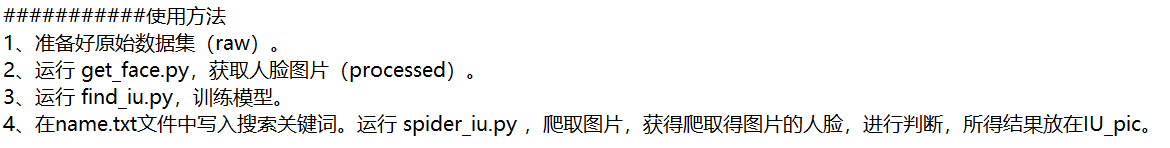

下載圖片時要注意區分,將IU的圖片放在一個文件夾下,Other的放在另一文件夾下。訓練集和測試集都要如此。如下圖所示:

每個文件夾內都是下圖形式:

在IU文件夾內圖片如下所示:

對于文件夾內文件的命名,可以利用以下這段程序,按順序重命名。

import os

raw_train_root_1 = 'E:/Table/學習數據集/find_iu/data/raw/train/IU/'

raw_train_root_2 = 'E:/Table/學習數據集/find_iu/data/raw/train/Other/'

raw_test_root_1 = 'E:/Table/學習數據集/find_iu/data/raw/test/IU/'

raw_test_root_2 = 'E:/Table/學習數據集/find_iu/data/raw/test/Other/'

raw_roots = [raw_train_root_1, raw_train_root_2, raw_test_root_1, raw_test_root_2]

for path in raw_roots:

# 獲取該目錄下所有文件,存入列表中

fileList = os.listdir(path)

n = 0

for i in fileList:

# 設置舊文件名(就是路徑+文件名)

oldname = path + os.sep + fileList[n] # os.sep添加系統分隔符

# 設置新文件名

newname = path + os.sep + str(n) + '.JPG'

os.rename(oldname, newname) # 用os模塊中的rename方法對文件改名

print(oldname, '======>', newname)

n += 1

2.提取人臉

提取人臉,需要用到一個人臉識別庫face_recognition庫。face_recognition庫的下載步驟參考:

https://www.jb51.net/article/209870.htm

主要有三步,可以直接在anaconda的命令行界面復制使用:

- pip install CMake -i https://pypi.douban.com/simple

- pip install dlib==19.7.0 -i https://pypi.douban.com/simple

- pip install face_recognition -i https://pypi.douban.com/simple

筆者已嘗試,確實可用。

使用下述的函數就可以獲得一張圖片對應的人臉,返回值就是人臉圖片。

# 找到圖片中的人臉

#############################################################################################

def find_face(path):

# Load the jpg file into a numpy array

image = face_recognition.load_image_file(path)

# Find all the faces in the image using the default HOG-based model.

# This method is fairly accurate, but not as accurate as the CNN model and not GPU accelerated.

# See also: find_faces_in_picture_cnn.py

face_locations = face_recognition.face_locations(image) # 可以選擇 model="cnn"

if len(face_locations) == 0:

return None

else:

for face_location in face_locations:

# Print the location of each face in this image

top, right, bottom, left = face_location

# You can access the actual face itself like this:

face_image = image[top:bottom, left:right]

pil_image = Image.fromarray(face_image)

return pil_image

#############################################################################################

對數據集進行操作之后,就可以獲得處理后的人臉圖片。之所以不用人物圖訓練,而是提取出人臉后再進行訓練,是考慮到人物圖像中干擾因素太多,且經過試驗后發現識別的效果非常差,于是加入這個提取人臉的環節。對數據集的操作代碼如下:

# 將訓練集和測試集中的raw圖片處理,提取出人臉圖片

#############################################################################################

if __name__ == '__main__': # 主函數入口

raw_train_root_1 = 'E:/Table/學習數據集/find_iu/data/raw/train/IU/'

raw_train_root_2 = 'E:/Table/學習數據集/find_iu/data/raw/train/Other/'

raw_test_root_1 = 'E:/Table/學習數據集/find_iu/data/raw/test/IU/'

raw_test_root_2 = 'E:/Table/學習數據集/find_iu/data/raw/test/Other/'

raw_roots = [raw_train_root_1, raw_train_root_2, raw_test_root_1, raw_test_root_2]

img_raw_train_1 = os.listdir(raw_train_root_1)

img_raw_train_2 = os.listdir(raw_train_root_2)

img_raw_test_1 = os.listdir(raw_test_root_1)

img_raw_test_2 = os.listdir(raw_test_root_2)

img_raws = [img_raw_train_1, img_raw_train_2, img_raw_test_1, img_raw_test_2]

new_path_train_1 = 'E:/Table/學習數據集/find_iu/data/processed/train/IU/'

new_path_train_2 = 'E:/Table/學習數據集/find_iu/data/processed/train/Other/'

new_path_test_1 = 'E:/Table/學習數據集/find_iu/data/processed/test/IU/'

new_path_test_2 = 'E:/Table/學習數據集/find_iu/data/processed/test/Other/'

new_paths = [new_path_train_1, new_path_train_2, new_path_test_1, new_path_test_2]

for raw_root, img_raw, new_path in zip(raw_roots, img_raws, new_paths):

n = 0

for i in range(len(img_raw)):

try:

img = Image.open(raw_root + img_raw[i])

except:

print('a file error, continue')

continue

else:

img_train = find_face(raw_root + img_raw[i])

if img_train == None:

continue

else:

# img_train.save(new_path + '%d.JPG'%n)

# print(raw_root + img_raw[i])

n += 1

print('在%d張圖片中,共找到%d張臉' % (len(img_raw), n))

#############################################################################################

處理前的圖片數據均存放在raw文件夾中,處理后的存放在processed文件夾中,如下圖:

兩個文件夾的內部結構完全一樣:

三、網絡模型

1、圖像處理

將圖片裁剪為112×92大小,使用RGB圖像,(這里試過用灰度圖像,但好像效果不會更好,就放棄了),在對圖片進行歸一化處理。

data_transform = transforms.Compose([

# transforms.Grayscale(num_output_channels=1), # 彩色圖像轉灰度圖像num_output_channels默認1

transforms.Resize(112),

transforms.CenterCrop((112, 92)), # 中心裁剪為112*92

transforms.ToTensor(),

transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])

# transforms.Normalize(mean=0.5, std=0.5)

])

使用孿生神經網絡(Siamese Network)

class SiameNetwork(nn.Module):

def __init__(self):

super(SiameNetwork, self).__init__()

# input: h=112, w=92

self.conv1 = torch.nn.Sequential(

torch.nn.Conv2d(in_channels=3, # 輸入單通道

out_channels=16, # 16個3*3卷積核

kernel_size=3, # 卷積核尺寸

stride=2, # 卷積核滑動步長, 1的話圖片大小不變,2的話會大小會變為(h/2)*(w/2)

padding=1), # 邊緣填充大小,如果要保持原大小,kernel_size//2

torch.nn.BatchNorm2d(16), # 標準化,前面卷積后有16個圖層

torch.nn.ReLU() # 激活函數

) # output: h=56, w=46

self.conv2 = torch.nn.Sequential(

torch.nn.Conv2d(16, 32, 3, 2, 1),

torch.nn.BatchNorm2d(32),

torch.nn.ReLU()

) # output: h=28, w=23

self.conv3 = torch.nn.Sequential(

torch.nn.Conv2d(32, 64, 3, 2, 1),

torch.nn.BatchNorm2d(64),

torch.nn.ReLU()

) # output: h=14, w=12

self.conv4 = torch.nn.Sequential(

torch.nn.Conv2d(64, 64, 2, 2, 0),

torch.nn.BatchNorm2d(64),

torch.nn.ReLU()

) # output: h=7, w=6

self.mlp1 = torch.nn.Linear(7 * 6 * 64, 100) # 需要計算conv4的輸出尺寸,每次卷積的輸出尺寸(size - kernal + 2*padding)/stride + 1

self.mlp2 = torch.nn.Linear(100, 10)

def forward(self, x):

x = self.conv1(x)

x = self.conv2(x)

x = self.conv3(x)

x = self.conv4(x)

x = self.mlp1(x.view(x.size(0), -1)) # view展平

x = self.mlp2(x)

return x

四、具體代碼

1.get_face.py

from PIL import Image

import face_recognition

import os

# 找到圖片中的人臉

#############################################################################################

def find_face(path):

# Load the jpg file into a numpy array

image = face_recognition.load_image_file(path)

# Find all the faces in the image using the default HOG-based model.

# This method is fairly accurate, but not as accurate as the CNN model and not GPU accelerated.

# See also: find_faces_in_picture_cnn.py

face_locations = face_recognition.face_locations(image) # 可以選擇 model="cnn"

if len(face_locations) == 0:

return None

else:

for face_location in face_locations:

# Print the location of each face in this image

top, right, bottom, left = face_location

# You can access the actual face itself like this:

face_image = image[top:bottom, left:right]

pil_image = Image.fromarray(face_image)

return pil_image

#############################################################################################

# 將訓練集和測試集中的raw圖片處理,提取出人臉圖片

#############################################################################################

if __name__ == '__main__': # 主函數入口

raw_train_root_1 = 'E:/Table/學習數據集/find_iu/data/raw/train/IU/'

raw_train_root_2 = 'E:/Table/學習數據集/find_iu/data/raw/train/Other/'

raw_test_root_1 = 'E:/Table/學習數據集/find_iu/data/raw/test/IU/'

raw_test_root_2 = 'E:/Table/學習數據集/find_iu/data/raw/test/Other/'

raw_roots = [raw_train_root_1, raw_train_root_2, raw_test_root_1, raw_test_root_2]

img_raw_train_1 = os.listdir(raw_train_root_1)

img_raw_train_2 = os.listdir(raw_train_root_2)

img_raw_test_1 = os.listdir(raw_test_root_1)

img_raw_test_2 = os.listdir(raw_test_root_2)

img_raws = [img_raw_train_1, img_raw_train_2, img_raw_test_1, img_raw_test_2]

new_path_train_1 = 'E:/Table/學習數據集/find_iu/data/processed/train/IU/'

new_path_train_2 = 'E:/Table/學習數據集/find_iu/data/processed/train/Other/'

new_path_test_1 = 'E:/Table/學習數據集/find_iu/data/processed/test/IU/'

new_path_test_2 = 'E:/Table/學習數據集/find_iu/data/processed/test/Other/'

new_paths = [new_path_train_1, new_path_train_2, new_path_test_1, new_path_test_2]

for raw_root, img_raw, new_path in zip(raw_roots, img_raws, new_paths):

n = 0

for i in range(len(img_raw)):

try:

img = Image.open(raw_root + img_raw[i])

except:

print('a file error, continue')

continue

else:

img_train = find_face(raw_root + img_raw[i])

if img_train == None:

continue

else:

# img_train.save(new_path + '%d.JPG'%n)

# print(raw_root + img_raw[i])

n += 1

print('在%d張圖片中,共找到%d張臉' % (len(img_raw), n))

#############################################################################################

2.find_iu.py

import torch

import torchvision

import torch.nn as nn

from torch.autograd import Variable

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

import cv2 #opencv庫,用于圖片可視化

import numpy as np

import os

from utils import draw_result

from network import SiameNetwork

from get_face import find_face

if __name__ == '__main__': # 主函數入口

# 設置參數

#############################################################################################

path = 'E:/Table/學習數據集/find_iu/result/' # 存放和生成結果的路徑標志

epochs = 20 #訓練周期

BATCH_SIZE = 16 #批量樣本大小

NUM_WORKERS = 0

#############################################################################################

# 數據處理

#############################################################################################

data_transform = transforms.Compose([

# transforms.Grayscale(num_output_channels=1), # 彩色圖像轉灰度圖像num_output_channels默認1

transforms.Resize(112),

transforms.CenterCrop((112, 92)), # 中心裁剪為112*92

transforms.ToTensor(),

transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])

# transforms.Normalize(mean=0.5, std=0.5)

])

train_dataset = datasets.ImageFolder(root = r'E:/Table/學習數據集/find_iu/data/processed/train',

transform = data_transform)

test_dataset = datasets.ImageFolder(root = r'E:/Table/學習數據集/find_iu/data/processed/test',

transform = data_transform)

train_loader = DataLoader(train_dataset, batch_size=BATCH_SIZE, shuffle=True, num_workers=NUM_WORKERS)

test_loader = DataLoader(test_dataset, batch_size=BATCH_SIZE, shuffle=True, num_workers=NUM_WORKERS)

image, labels = next(iter(train_loader)) #數據可視化

img = torchvision.utils.make_grid(image, nrow = 10)

img = img.numpy().transpose(1, 2, 0)

cv2.imshow('img', img) #展示圖像

cv2.waitKey(0) #按下任一按鍵后開始工作

print("data ready!")

#############################################################################################

#配置設備、損失函數和優化器

#############################################################################################

device = torch.device('cuda')

model = SiameNetwork().to(device)

cost = torch.nn.CrossEntropyLoss() #定義損失函數,使用交叉熵

optimizer = torch.optim.Adam(model.parameters(), lr=0.0008, weight_decay=0.001) #Adam優化器

print("device ready!")

#############################################################################################

#訓練過程,訓練周期由epochs決定

#############################################################################################

draw_epoch = [] #記錄訓練階段

draw_loss = [] #記錄訓練損失,用于繪制

draw_train_acc = [] #記錄訓練準確度,用于繪制

draw_val_loss = [] #記錄測試損失,用于繪制

draw_val_acc = [] # 記錄測試準確度,用于繪制

for epoch in range(epochs):

#訓練過程

sum_loss = 0.0

sum_val_loss = 0.0

train_correct = 0

test_correct = 0

for data in train_loader:

inputs,labels = data

inputs,labels = Variable(inputs).cuda(),Variable(labels).cuda()

optimizer.zero_grad() #將上一batch梯度清零

outputs = model(inputs)

loss = cost(outputs, labels)

loss.backward() #反向傳播

optimizer.step()

_, id = torch.max(outputs.data, 1)

sum_loss += loss.data

train_correct += torch.sum(id == labels.data)

for data in test_loader: # 模型測試

inputs,labels = data

inputs,labels = Variable(inputs).cuda(),Variable(labels).cuda()

outputs = model(inputs)

val_loss = cost(outputs, labels)

_,id = torch.max(outputs.data, 1)

sum_val_loss += val_loss.data

test_correct += torch.sum(id == labels.data)

print('[%d,%d] train loss:%.03f train acc:%.03f%%'

%(epoch + 1, epochs, sum_loss / len(train_loader), (100 * train_correct / len(train_dataset))))

print(' val loss:%.03f val acc:%.03f%%'

%(sum_val_loss / len(test_loader), (100 * test_correct / len(test_dataset))))

draw_epoch.append(epoch+1) # 用于后續畫圖的數據

draw_loss.append(sum_loss / len(train_loader))

draw_train_acc.append(100 * train_correct / len(train_dataset))

draw_val_loss.append(sum_val_loss / len(test_loader))

draw_val_acc.append(100 * test_correct / len(test_dataset))

np.savetxt('%s/train_loss.txt'%(path), draw_loss, fmt="%.3f") # 保存損失數據

np.savetxt('%s/train_acc.txt'%(path), draw_train_acc, fmt="%.3f") # 保存準確率數據

np.savetxt('%s/val_loss.txt'%(path), draw_val_loss, fmt="%.3f") # 保存損失數據

np.savetxt('%s/val_acc.txt'%(path), draw_val_acc, fmt="%.3f") # 保存準確率數據

print("train ready!")

#############################################################################################

#數據可視化

#############################################################################################

draw_result(draw_epoch, path) # 繪圖函數

print("draw ready!")

#############################################################################################

#模型的存儲和載入

#############################################################################################

torch.save(model.state_dict(), "parameter.pkl") #save

print("save ready!")

#############################################################################################

3.spider_iu.py

import re

import requests

from urllib import error

from bs4 import BeautifulSoup

import os

import torch

from torch.autograd import Variable

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

from network import SiameNetwork

from utils import cv_imread

import cv2

from PIL import Image

import shutil

from get_face import find_face

# 設置參數

#############################################################################################

GET_PIC = 0 # 1 執行這步,0 不執行

GET_FACE = 0

GET_IU = 1

#############################################################################################

num = 0

numPicture = 0

file = ''

List = []

# 爬蟲所用函數

#############################################################################################

def Find(url, A):

global List

print('正在檢測圖片總數,請稍等.....')

t = 0

i = 1

s = 0

while t 1000:

Url = url + str(t)

try:

# 這里搞了下

Result = A.get(Url, timeout=7, allow_redirects=False)

except BaseException:

t = t + 60

continue

else:

result = Result.text

pic_url = re.findall('"objURL":"(.*?)",', result, re.S) # 先利用正則表達式找到圖片url

s += len(pic_url)

if len(pic_url) == 0:

break

else:

List.append(pic_url)

t = t + 60

return s

def recommend(url):

Re = []

try:

html = requests.get(url, allow_redirects=False)

except error.HTTPError as e:

return

else:

html.encoding = 'utf-8'

bsObj = BeautifulSoup(html.text, 'html.parser')

div = bsObj.find('div', id='topRS')

if div is not None:

listA = div.findAll('a')

for i in listA:

if i is not None:

Re.append(i.get_text())

return Re

def dowmloadPicture(html, keyword):

global num

# t =0

pic_url = re.findall('"objURL":"(.*?)",', html, re.S) # 先利用正則表達式找到圖片url

print('找到關鍵詞:' + keyword + '的圖片,即將開始下載圖片...')

for each in pic_url:

print('正在下載第' + str(num + 1) + '張圖片,圖片地址:' + str(each))

try:

if each is not None:

pic = requests.get(each, timeout=7)

else:

continue

except BaseException:

print('錯誤,當前圖片無法下載')

continue

else:

string = file + r'\\' + keyword + '_' + str(num) + '.jpg'

fp = open(string, 'wb')

fp.write(pic.content)

fp.close()

num += 1

if num >= numPicture:

return

#############################################################################################

if __name__ == '__main__': # 主函數入口

# 爬蟲部分,存放到 name + ‘文件'

#############################################################################################

if GET_PIC == 1:

headers = {

'Accept-Language': 'zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2',

'Connection': 'keep-alive',

'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64; rv:60.0) Gecko/20100101 Firefox/60.0',

'Upgrade-Insecure-Requests': '1'

}

A = requests.Session()

A.headers = headers

tm = int(input('請輸入每類圖片的下載數量 '))

numPicture = tm

line_list = []

with open('./name.txt', encoding='utf-8') as file:

line_list = [k.strip() for k in file.readlines()] # 用 strip()移除末尾的空格

for word in line_list:

url = 'https://image.baidu.com/search/flip?tn=baiduimageie=utf-8word=' + word + 'pn='

tot = Find(url, A)

Recommend = recommend(url) # 記錄相關推薦

print('經過檢測%s類圖片共有%d張' % (word, tot))

file = word + '文件'

y = os.path.exists(file)

if y == 1:

print('該文件已存在,無需創建')

else:

os.mkdir(file)

t = 0

tmp = url

while t numPicture:

try:

url = tmp + str(t)

# result = requests.get(url, timeout=10)

# 這里搞了下

result = A.get(url, timeout=10, allow_redirects=False)

print(url)

except error.HTTPError as e:

print('網絡錯誤,請調整網絡后重試')

t = t + 60

else:

dowmloadPicture(result.text, word)

t = t + 60

numPicture = numPicture + tm

print('當前搜索結束,開始提取人臉')

#############################################################################################

# 將訓練集和測試集中的raw圖片處理,提取出人臉圖片,從file+'文件'到‘待分辨人臉'

############################################################################################

if GET_FACE == 1:

if GET_PIC == 0:

file = '韓國女藝人文件'

raw_root = 'E:/Table/學習數據集/find_iu/'+ file + '/'

img_raw = os.listdir(raw_root)

new_path = 'E:/Table/學習數據集/find_iu/待分辨人臉/'

n = 0

for i in range(len(img_raw)):

try:

img = Image.open(raw_root + img_raw[i])

except:

print('a file error, continue')

continue

else:

img_train = find_face(raw_root + img_raw[i])

if img_train == None:

continue

else:

img_train.save(new_path + '%d.JPG' % n)

print(raw_root + img_raw[i])

n += 1

print('在%d張圖片中,共找到%d張臉' % (len(img_raw), n))

print('提取人臉結束,開始尋找IU')

#############################################################################################

# 開始判別,從'待分辨人臉‘中找出IU存放到'IU_pic‘

#############################################################################################

if GET_IU == 1:

data_transform = transforms.Compose([

# transforms.Grayscale(num_output_channels=1), # 彩色圖像轉灰度圖像num_output_channels默認1

transforms.Resize(112),

transforms.CenterCrop((112, 92)), # 中心裁剪為112*92

transforms.ToTensor(),

transforms.Normalize(mean=[0.5, 0.5, 0.5],std=[0.5, 0.5, 0.5])

# transforms.Normalize(mean=0.5, std=0.5)

])

device = torch.device('cuda')

model = SiameNetwork().to(device)

model.load_state_dict(torch.load('parameter.pkl')) # load

model.eval()

judge_root = 'E:/Table/學習數據集/find_iu/待分辨人臉/'

img_judge = os.listdir(judge_root)

new_path = 'E:/Table/學習數據集/find_iu/IU_pic/'

result = []

n = 0

for i in range(len(img_judge)):

try:

img = Image.open(judge_root + img_judge[i])

except:

print('a file error, continue')

continue

else:

img = img.convert('RGB')

print(judge_root + img_judge[i])

input = data_transform(img)

input = input.unsqueeze(0) # 這里經過轉換后輸出的input格式是[C,H,W],網絡輸入還需要增加一維批量大小B

# 增加一維,輸出的img格式為[1,C,H,W]

input = Variable(input.cuda())

output = model(input) # 將圖片輸入網絡得到輸出

_, id = torch.max(output.data, 1) # 0是IU,1是其他

if id.item() == 0:

shutil.copy(judge_root + img_judge[i], new_path)

n += 1

print('/n在%d張圖片中,共找到%d張IU的圖片'%(len(img_judge), n))

#############################################################################################

4.file_deal.py

import os

raw_train_root_1 = 'E:/Table/學習數據集/find_iu/data/raw/train/IU/'

raw_train_root_2 = 'E:/Table/學習數據集/find_iu/data/raw/train/Other/'

raw_test_root_1 = 'E:/Table/學習數據集/find_iu/data/raw/test/IU/'

raw_test_root_2 = 'E:/Table/學習數據集/find_iu/data/raw/test/Other/'

raw_roots = [raw_train_root_1, raw_train_root_2, raw_test_root_1, raw_test_root_2]

for path in raw_roots:

# 獲取該目錄下所有文件,存入列表中

fileList = os.listdir(path)

n = 0

for i in fileList:

# 設置舊文件名(就是路徑+文件名)

oldname = path + os.sep + fileList[n] # os.sep添加系統分隔符

# 設置新文件名

newname = path + os.sep + str(n) + '.JPG'

os.rename(oldname, newname) # 用os模塊中的rename方法對文件改名

print(oldname, '======>', newname)

n += 1

5.network.py

import torch

import torch.nn as nn

class SiameNetwork(nn.Module):

def __init__(self):

super(SiameNetwork, self).__init__()

# input: h=112, w=92

self.conv1 = torch.nn.Sequential(

torch.nn.Conv2d(in_channels=3, # 輸入單通道

out_channels=16, # 16個3*3卷積核

kernel_size=3, # 卷積核尺寸

stride=2, # 卷積核滑動步長, 1的話圖片大小不變,2的話會大小會變為(h/2)*(w/2)

padding=1), # 邊緣填充大小,如果要保持原大小,kernel_size//2

torch.nn.BatchNorm2d(16), # 標準化,前面卷積后有16個圖層

torch.nn.ReLU() # 激活函數

) # output: h=56, w=46

self.conv2 = torch.nn.Sequential(

torch.nn.Conv2d(16, 32, 3, 2, 1),

torch.nn.BatchNorm2d(32),

torch.nn.ReLU()

) # output: h=28, w=23

self.conv3 = torch.nn.Sequential(

torch.nn.Conv2d(32, 64, 3, 2, 1),

torch.nn.BatchNorm2d(64),

torch.nn.ReLU()

) # output: h=14, w=12

self.conv4 = torch.nn.Sequential(

torch.nn.Conv2d(64, 64, 2, 2, 0),

torch.nn.BatchNorm2d(64),

torch.nn.ReLU()

) # output: h=7, w=6

self.mlp1 = torch.nn.Linear(7 * 6 * 64, 100) # 需要計算conv4的輸出尺寸,每次卷積的輸出尺寸(size - kernal + 2*padding)/stride + 1

self.mlp2 = torch.nn.Linear(100, 10)

def forward(self, x):

x = self.conv1(x)

x = self.conv2(x)

x = self.conv3(x)

x = self.conv4(x)

x = self.mlp1(x.view(x.size(0), -1)) # view展平

x = self.mlp2(x)

return x

6.utils.py

import matplotlib as mpl

import matplotlib.pyplot as plt

import numpy as np

import cv2

# 繪制訓練、測試的損失、準確度

#############################################################################################

def draw_result(draw_epoch, path):

show_loss = np.loadtxt('%s/train_loss.txt' % (path)) # 讀取txt文件,不同優化器的損失

show_train_acc = np.loadtxt('%s/train_acc.txt' % (path)) # 讀取不同模型的準確度

show_val_loss = np.loadtxt('%s/val_loss.txt' % (path)) # 讀取txt文件,不同優化器的損失

show_val_acc = np.loadtxt('%s/val_acc.txt' % (path)) # 讀取不同模型的準確度

mpl.rc('font',family='Times New Roman', weight='semibold', size=9) # 設置matplotlib中所有繪圖風格的設置

font1 = {'weight' : 'semibold', 'size' : 11} #設置文字風格

fig = plt.figure(figsize = (7,5)) #figsize是圖片的大小`

ax1 = fig.add_subplot(2, 2, 1) # ax1是子圖的名字

ax1.plot(draw_epoch, show_loss,color = 'red', label = u'AdaPID', linewidth =1.0)

ax1.legend() #顯示圖例

ax1.set_title('Training Loss', font1)

ax1.set_xlabel(u'Epoch', font1)

ax2 = fig.add_subplot(2, 2, 2)

ax2.plot(draw_epoch, show_val_loss,color = 'red', label = u'Adam', linewidth =1.0)

ax2.legend() #顯示圖例

ax2.set_title('Validation Loss', font1)

ax2.set_xlabel(u'Epoch', font1)

ax3 = fig.add_subplot(2, 2, 3)

ax3.plot(draw_epoch, show_train_acc,color = 'red', label = u'Adam', linewidth =1.0)

ax3.legend() #顯示圖例

ax3.set_title('Training Accuracy', font1)

ax3.set_xlabel(u'Epoch', font1)

ax4 = fig.add_subplot(2, 2, 4)

ax4.plot(draw_epoch, show_val_acc,color = 'red', label = u'Adam', linewidth =1.0)

ax4.legend() #顯示圖例

ax4.set_title('Validation Accuracy', font1)

ax4.set_xlabel(u'Epoch', font1)

plt.subplots_adjust(left=None, bottom=None, right=None, top=None, wspace=None, hspace=0.45) # hspace為子圖上下間距

plt.savefig('%s/show_curve.jpg' % (path), dpi=300)

#############################################################################################

# 用于解決cv.imread不能讀取中文路徑的問題

#############################################################################################

def cv_imread(filePath):

# 核心就是下面這句,一般直接用這句就行,直接把圖片轉為mat數據

cv_img = cv2.imdecode(np.fromfile(filePath, dtype=np.uint8), -1)

# imdecode讀取的是rgb,如果后續需要opencv處理的話,需要轉換成bgr,轉換后圖片顏色會變化

# cv_img=cv2.cvtColor(cv_img,cv2.COLOR_RGB2BGR)

return cv_img

#############################################################################################

總結

總體而言,這是一個新人的興趣之作,但是限于GPU性能無法使用太復雜的網絡,最后識別的效果不佳,若讀者有興趣,也可以去替換一下網絡,改善一下數據集,嘗試提升識別性能。更多相關人臉識別內容請搜索腳本之家以前的文章或繼續瀏覽下面的相關文章,希望大家以后多多支持腳本之家!

您可能感興趣的文章:- 用Python做個個性的動畫掛件讓桌面不單調

- 自己用python做的一款超炫酷音樂播放器

- Python做個自定義動態壁紙還可以放視頻

- 使用python svm實現直接可用的手寫數字識別

- 基礎語音識別-食物語音識別baseline(CNN)

- 詳細過程帶你用Python做車牌自動識別系統